Google recently released the TensorFlow 1.0 release candidate. This first stable release will be a milestone in the development of the deep learning framework. In the little more than a year since TensorFlow was officially open sourced at the end of 2015, it has repeatedly surprised the community. In that time, TensorFlow has evolved from a rising newcomer in the deep learning framework wars into the industry's de facto standard, with a near monopoly. This article is excerpted from the second chapter of a book on TensorFlow.

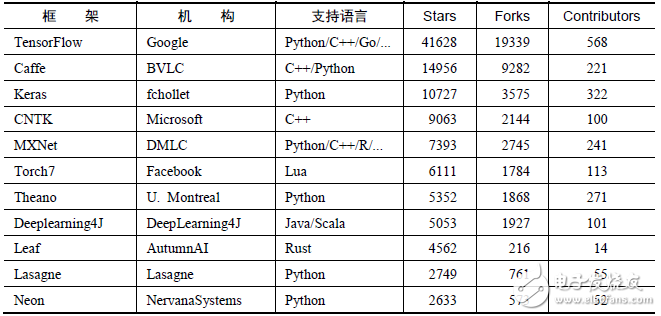

Mainstream deep learning framework comparison

Enthusiasm for deep learning research continues to grow, and open source deep learning frameworks keep emerging, including TensorFlow, Caffe, Keras, CNTK, Torch7, MXNet, Leaf, Theano, Deeplearning4j, Lasagne, Neon, and more. TensorFlow, however, has taken the lead and holds a clear advantage in both attention and number of users. Table 2-1 shows the GitHub statistics for each open source framework (data collected on January 3, 2017). TensorFlow beats its competitors in number of stars, number of forks, and number of contributors.

The main reason is Google's strong appeal in the industry. Google has released many successful open source projects before, and its strength in artificial intelligence research and development gives people confidence in its deep learning framework. As a result, TensorFlow accumulated more than 10,000 stars in its first month after being open sourced in November 2015. The second reason is that TensorFlow genuinely performs well in many respects, such as the simplicity of the code used to design neural network structures, the execution efficiency of distributed deep learning algorithms, and the convenience of deployment. These are the highlights behind its success. If you follow TensorFlow's development, you will find that more than 10,000 lines of code are updated almost every week, and sometimes tens of thousands. The product's quality, rapid iteration, active community, and positive feedback form a virtuous circle, and it is reasonable to expect that TensorFlow will continue to lead the deep learning frameworks in the future.

Table 2-1 Statistics of various open source frameworks on GitHub

Table 2-1 also shows that giants such as Google, Microsoft, and Facebook are all taking part in this deep learning framework war. In addition, there is Caffe, whose development was led by Yangqing Jia, a graduate of UC Berkeley; Theano, developed by the Lisa Lab team at the University of Montreal; and other frameworks contributed by individuals or commercial organizations. You can also see that the mainstream frameworks almost all support Python. Python is currently the leading language in scientific computing and data mining. Although it faces competition from languages such as R and Julia, Python's libraries are remarkably complete: web development, data visualization, data preprocessing, database connectivity, web crawling, and more are all covered by a mature ecosystem. In the data mining tool chain alone, Python has NumPy, SciPy, Pandas, Scikit-learn, XGBoost, and other components that make data acquisition and preprocessing very convenient, and the subsequent model training phase connects seamlessly with Python-based deep learning frameworks such as TensorFlow.

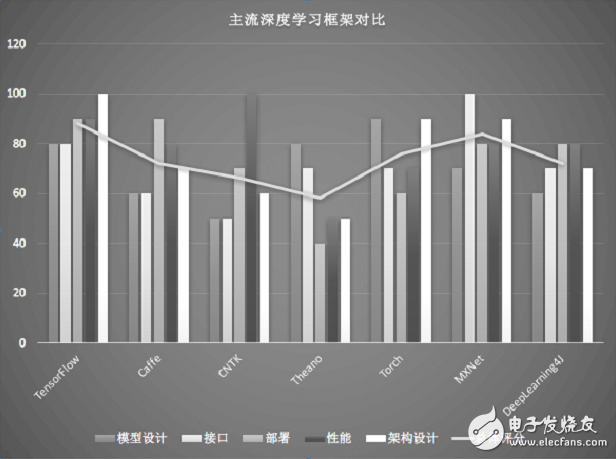

Table 2-2 and Figure 2-1 show the ratings of the mainstream deep learning frameworks TensorFlow, Caffe, CNTK, Theano, and Torch in various dimensions. Each framework is described in detail in Section 2.2 of the book.

Table 2-2 Ratings of the mainstream deep learning framework in each dimension

Figure 2-1 Comparison of mainstream deep learning frameworks

Introduction to each deep learning framework

In this section, let's look at the similarities and differences between the currently popular frameworks, as well as their respective characteristics and advantages.

TensorFlow

TensorFlow is a relatively high-level machine learning library that lets users design neural network structures easily, without having to write C++ or CUDA code themselves to get an efficient implementation. Like Theano, it supports automatic differentiation, so users do not need to derive gradients by hand for backpropagation. Its core code, like Caffe's, is written in C++. Using C++ simplifies online deployment and allows devices with tight memory and CPU resources, such as mobile phones, to run complex models (Python consumes more resources and executes less efficiently). In addition to the C++ interface of the core code, TensorFlow has official Python, Go, and Java interfaces, which are implemented through SWIG (Simplified Wrapper and Interface Generator). Users can therefore experiment in Python on a machine with good hardware, and then deploy the model in C++ in a resource-constrained embedded environment or in an environment that requires low latency. SWIG can generate interfaces for many languages from C/C++ code, so interfaces for other scripting languages can easily be added through SWIG in the future. One efficiency problem when using Python, however, is that every mini-batch has to be fed from Python into the network. When the mini-batch is small or the computation time is short, this feeding step can introduce significant latency. TensorFlow now also has unofficial interfaces for Julia, Node.js, and R.
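To make the idea of automatic differentiation more concrete, here is a minimal sketch in plain Python. It is a toy illustration only, not TensorFlow's or Theano's implementation: the `Value` class and its methods are hypothetical names invented for this example, and it handles only scalar addition and multiplication. The point is that the user writes just the forward computation, and the gradients are obtained by applying the chain rule backward through the recorded graph.

```python
# Toy reverse-mode automatic differentiation (illustrative only; this is
# NOT how TensorFlow is implemented, and `Value` is a hypothetical class).

class Value:
    """A scalar node in a computation graph that remembers its inputs."""

    def __init__(self, data, parents=(), grad_fns=()):
        self.data = data
        self.grad = 0.0
        self._parents = parents    # nodes this value was computed from
        self._grad_fns = grad_fns  # maps upstream gradient to each parent's share

    def __add__(self, other):
        # d(a + b)/da = 1 and d(a + b)/db = 1
        return Value(self.data + other.data, (self, other),
                     (lambda g: g, lambda g: g))

    def __mul__(self, other):
        # d(a * b)/da = b and d(a * b)/db = a
        return Value(self.data * other.data, (self, other),
                     (lambda g: g * other.data, lambda g: g * self.data))

    def backward(self):
        # Order the graph topologically, then apply the chain rule
        # from the output back to the inputs.
        order, seen = [], set()

        def visit(node):
            if id(node) not in seen:
                seen.add(id(node))
                for parent in node._parents:
                    visit(parent)
                order.append(node)

        visit(self)
        self.grad = 1.0
        for node in reversed(order):
            for parent, grad_fn in zip(node._parents, node._grad_fns):
                parent.grad += grad_fn(node.grad)


# The user writes only the forward pass: loss = (w * x + b) ** 2
w, x, b = Value(2.0), Value(3.0), Value(1.0)
y = w * x + b
loss = y * y

# Gradients are filled in automatically, with no hand-derived formulas.
loss.backward()
print(w.grad, b.grad)  # 42.0 14.0
```

Here `y` is 7, so the derivative of the loss with respect to `y` is 14, which gives 14 * 3 = 42 for `w` and 14 for `b`. A real framework does the same bookkeeping over tensors and a much larger set of operations.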
