Following AlphaGo's 4:1 victory over Lee Sedol last year, the mysterious player "Master" swept online Go over the past week, challenging top professionals and winning 60 games in a row. "Master" has now been unmasked as a new version of AlphaGo. Panic has spread across social networks. Xia Yonghong studies cognitive science and the philosophy of mind. In his view, AlphaGo's algorithm design is very subtle, but it still rests on brute-force statistical computation over big data, which is completely different from the way human intelligence works.

"Thinking is a function of the immortal soul of mankind, so no animal or machine can think."

"The consequences of machine thinking are terrible. We hope and believe that machines can't do this."

"Gödel's theorem shows that any sufficiently powerful formal system is incomplete: it always faces questions it cannot decide by itself, so it is hard for machines to surpass the human mind."

"The machine has no phenomenal experience; it has no thoughts or feelings."

"Machines cannot match the rich variety of human abilities."

"The machine can't create anything new. All it can do is whatever we know how to order it to perform."

"The nervous system is not a discrete-state machine, so a machine cannot simulate it."

"It is impossible to formalize all the common sense that guides behavior."

"Man has the power of telepathy, and the machine does not."

These objections to artificial intelligence (hereinafter AI) were first listed by the AI pioneer Alan Turing in his famous paper "Computing Machinery and Intelligence" (1950). Turing already offered preliminary rebuttals to them. Even so, almost every later argument against AI can trace its prototype to one of these viewpoints. Since the birth of AI, doubts and criticisms of it have never stopped.

AlphaGo defeated Lee Sedol in March last year. Recently, playing as "Master", it also beat the top players of China, Japan and Korea. Since then, these views seem to have disappeared. Few people now doubt that AI will surpass human intelligence at a so-called singularity; the only controversy is when the singularity will come. Even those hostile to AI do not doubt that it is possible. Their only concern is that humanity may be eliminated by AI.

Yet this blind optimism may do irresponsible harm to the future development of AI. The higher the expectations, the greater the disappointment. Optimism also skips a rigorous review of current AI. If we understand how AlphaGo works, we will find that it still faces the philosophical challenges shared by all AI.

First, why is artificial intelligence not smart?

Many of the critiques Turing enumerated later developed into more elaborate arguments:

- The critique based on Gödel's theorem was developed by the philosopher Lucas and the physicist Penrose.
- The worry that common sense cannot be formalized reappeared as the frame problem and the common-sense problem.
- The worry that machines merely follow rules mechanically and cannot think for themselves lies at the core of the later Chinese Room argument and the symbol grounding problem.
- The claim that machines lack phenomenal experience is the main objection to AI among philosophers of mind who emphasize first-person experience and qualia.

(1) The frame problem is one of the most serious problems plaguing AI, and it has still not been effectively solved. The original paradigm of AI was symbolism, which represents the world with symbolic logic, and the frame problem is inherent in this process of representation. The cognitive scientist Dennett used the following example to describe it.

We instruct a robot to enter a room containing a time bomb and fetch a spare battery. However, the battery and the bomb sit on the same wagon. When the robot pulls the wagon out to get the battery, it pulls out the bomb as well, and the bomb explodes.

To avoid such accidents, we have the robot also deduce the side effects of its actions. So after entering the room, the robot starts calculating whether pulling the wagon out will change the color of the walls, how many times the wagon's wheels will turn, and so on. It does not know which consequences are relevant to its goal and which are not. While it is lost in endless calculation, the bomb explodes.

We improve the robot again, teaching it to distinguish side effects that are relevant to the task from those that are not. But while it is busy sorting the relevant from the irrelevant, the bomb explodes once more.

When a robot acts on the outside world, some things in the world change, and the robot must update its internal representation accordingly. But the robot itself does not know which things change and which do not. It therefore needs a framework that specifies which items are relevant to a given change. Such a framework is extremely cumbersome. It also depends on the specific situation, which makes it even more complicated, and it eventually far exceeds what a computer can handle. This is the so-called frame problem.
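A minimal sketch shows why the relevant changes have to be specified by hand. It assumes a STRIPS-style encoding that the article itself does not use: an action lists the facts it adds and deletes, and every fact it does not mention is assumed to persist. When only the obvious effects are listed, the model concludes that the bomb stays in the room, which is exactly the first robot's mistake. The names (`PULL_WAGON_OUT`, the fact strings, `apply_with_ramification`) are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    """A STRIPS-style action: the facts it adds and the facts it deletes."""
    name: str
    add: frozenset
    delete: frozenset


def apply(state: frozenset, action: Action) -> frozenset:
    # Frame assumption: every fact the action does not mention stays true.
    return (state - action.delete) | action.add


# Hypothetical encoding of Dennett's room: battery and bomb sit on one wagon.
START = frozenset({
    "wagon_in_room", "battery_in_room", "bomb_in_room",
    "battery_on_wagon", "bomb_on_wagon",
})

# Only the intended effects are listed: the wagon and the battery leave the room.
PULL_WAGON_OUT = Action(
    name="pull_wagon_out",
    add=frozenset({"wagon_outside", "battery_outside"}),
    delete=frozenset({"wagon_in_room", "battery_in_room"}),
)


def apply_with_ramification(state: frozenset, action: Action) -> frozenset:
    """The same update plus one hand-written side-effect rule (hypothetical):
    whatever is on the wagon leaves the room together with it."""
    new_state = apply(state, action)
    if "wagon_outside" in new_state:
        for fact in state:
            if fact.endswith("_on_wagon"):
                obj = fact[: -len("_on_wagon")]
                new_state = (new_state - {obj + "_in_room"}) | {obj + "_outside"}
    return new_state


naive = apply(START, PULL_WAGON_OUT)
print("bomb_in_room" in naive)      # True: the naive model thinks the bomb stayed inside
patched = apply_with_ramification(START, PULL_WAGON_OUT)
print("bomb_outside" in patched)    # True: the extra rule moves the bomb out too
```

The patch only covers one kind of side effect. Wheels turning, walls changing color and every other consequence would each need another hand-written rule or another check. That is the growing, situation-dependent burden the paragraph describes.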

This problem is often associated with another problem of representation in AI, the common-sense problem. We all know Asimov's Three Laws of Robotics: a robot must not harm a human being (and must try to save humans in danger), must obey the orders given to it, and must protect its own existence as far as possible. In fact, these three laws can hardly serve as instructions for robots, because they are not well-defined instructions that can be executed effectively.

For example, the law of saving people calls for different means in different situations. When a person is hanging by the neck, the way to save him is to cut the rope. But when a person is hanging from a rope outside the window of a five-story building and calling for help, the way to save him is to pull the rope up rather than cut it. To make a robot act on such laws, a large amount of background knowledge must therefore be formalized. Unfortunately, the expert systems and knowledge-representation engineering of the second wave of AI in the 1980s failed because they could not handle the representation of common sense.

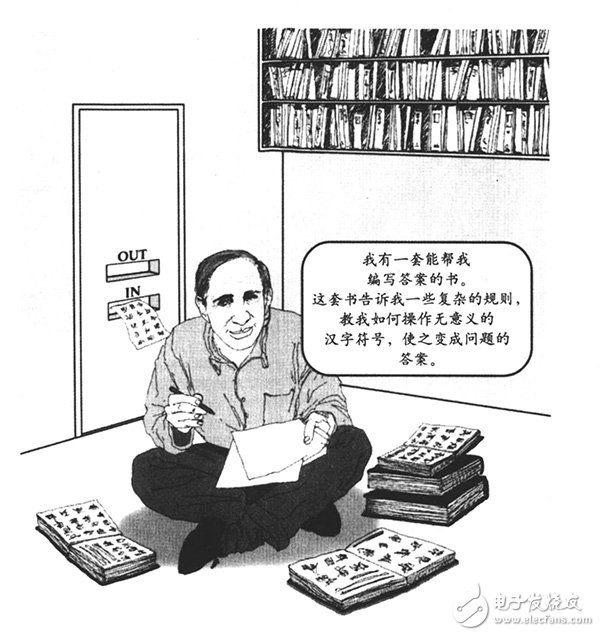

(2) Another problem for AI is the Chinese Room argument and the symbol grounding problem that grew out of it. The philosopher of mind Searle designed the following thought experiment. He imagines himself locked in a closed room. In the room is an instruction manual written in English. It explains how to produce the Chinese answer to a given Chinese question, based only on the shapes of the Chinese characters and not their meanings. Searle receives Chinese questions through a window and, following the English manual, hands back the corresponding Chinese answers. From outside the room, it seems that Searle understands Chinese. In fact, he does not understand any of these questions and answers.

In Searle's view, a digital computer is just like Searle in the Chinese Room: it merely processes strings of symbols according to physical and syntactic rules, without understanding their meaning at all. A computer may exhibit intelligent behavior similar to a human's, but its work ultimately consists of processing symbols. The computer neither understands the meaning of those symbols nor generates it autonomously. That meaning depends on the significance the symbols have in human minds.

Building on Searle, the cognitive scientist Harnad later posed the so-called symbol grounding problem: how can an artificial system generate the meaning of its symbols autonomously, without humans assigning that meaning from outside or in advance? The question is really how to let AI identify features in the world autonomously and then generate symbols corresponding to those features. "Deep learning" attempts to solve this problem, but its solution is not entirely satisfactory.

(3) Phenomenal consciousness is a further problem for AI. The frame problem and the symbol grounding problem both concern how to simulate human representational activity in a formal system. But suppose AI can simulate these representational activities. Whether human consciousness can be reduced to representation is still controversial.

The representational theory of consciousness holds that all conscious processes can be reduced to processes of representation. Some philosophers of mind come from, or sympathize with, the phenomenological tradition. For them, consciousness includes subjective conscious experience that cannot be eliminated from the human mind. We generally call this first-person experience qualia, or phenomenal consciousness.

The philosopher of mind Nagel wrote a famous article, "What Is It Like to Be a Bat?". He argued that even if we had all the neurobiological knowledge about bats, we still could not know exactly what a bat's inner conscious experience is like. Chalmers makes a similar supposition. We can imagine a zombie whose every activity is exactly like a human's, but which lacks the most essential feature of human consciousness, phenomenal experience.

In their view, representational processes cannot simulate conscious experience. If this view is correct, representation and consciousness are two different things. Even if strong AI is possible, it would not necessarily be conscious.

Second, is AlphaGo really smart?

So, is AlphaGo really revolutionary, a milestone in the development of AI? In fact, AlphaGo does not use any new algorithm, so it shares the limitations of these traditional algorithms.

AlphaGo evaluates board positions and chooses moves with two neural networks, a policy network and a value network. They are trained with two learning modes, supervised learning and reinforcement learning.

DeepMind's engineers first used supervised learning to train a policy network on a large collection of human game records, so that it learns to imitate the moves of human players. But imitating such patterns is not enough to make a master. One must also evaluate the position after each move in order to choose the best one.

To this end, DeepMind used reinforcement learning. Starting from the previously trained policy network, AlphaGo kept playing against itself, because human game records are far from sufficient. From these games it trained a reinforcement-learning policy network whose goal is no longer to imitate how human players move, but to learn how to win. The most innovative part of AlphaGo is the value network it trained on this self-play data to assess the whole board position.

When playing against humans, AlphaGo combines these neural networks through Monte Carlo tree search. The policy network proposes candidate moves, the value network estimates the winning probability of the resulting positions, and the search finally decides which move to play. Traditional brute-force search lacks both of these guides. Here, the policy network has learned standard patterns of play and the value network evaluates and prunes candidate moves, so the breadth and depth of the search are greatly reduced.
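The sketch below makes the division of labor between the two networks concrete. It is a minimal Monte Carlo tree search guided by a policy prior and a value estimate, using a PUCT-style selection rule. Everything in it is an illustrative assumption:

- The game is a toy take-away game rather than Go. Players remove 1 to 3 stones, and whoever takes the last stone wins.
- `policy_prior` is a uniform stand-in for the policy network.
- `value_estimate` is a hand-written rule standing in for the value network. In this toy it happens to be exact, which a real network never is.

It omits the fast rollouts AlphaGo also used, along with many other details.

```python
import math

# Toy game standing in for Go (assumption): players alternately remove 1-3
# stones from a pile, and whoever takes the last stone wins.
MOVES = (1, 2, 3)


def legal_moves(stones):
    return [m for m in MOVES if m <= stones]


def policy_prior(stones):
    """Hypothetical stand-in for the policy network: uniform prior over legal moves."""
    moves = legal_moves(stones)
    return {m: 1.0 / len(moves) for m in moves}


def value_estimate(stones):
    """Hypothetical stand-in for the value network: value (+1 win, -1 loss) of a
    non-empty pile for the player to move. A multiple of 4 is lost for the mover."""
    return -1.0 if stones % 4 == 0 else 1.0


class Node:
    def __init__(self, prior):
        self.prior = prior      # prior probability from the policy stand-in
        self.visits = 0         # how often simulations passed through this node
        self.value_sum = 0.0    # summed values, from this node's mover's view
        self.children = {}      # move -> Node

    def q(self):
        return self.value_sum / self.visits if self.visits else 0.0


def select_move(node, c_puct):
    """PUCT-style selection: prefer moves that have looked good so far (q), plus
    a bonus (u) for rarely visited moves that the prior favors."""
    sqrt_total = math.sqrt(node.visits)
    chosen, best_score = None, -math.inf
    for move, child in node.children.items():
        q = -child.q()  # child values are from the opponent's point of view
        u = c_puct * child.prior * sqrt_total / (1 + child.visits)
        if q + u > best_score:
            chosen, best_score = move, q + u
    return chosen


def simulate(node, stones, c_puct):
    """Run one simulation from `node`; return its value for the player to move."""
    if stones == 0:
        value = -1.0  # the opponent took the last stone
    elif not node.children:
        # Leaf: expand with the policy prior, evaluate with the value estimate
        for move, p in policy_prior(stones).items():
            node.children[move] = Node(p)
        value = value_estimate(stones)
    else:
        move = select_move(node, c_puct)
        value = -simulate(node.children[move], stones - move, c_puct)
    node.visits += 1
    node.value_sum += value
    return value


def best_move(stones, simulations=200, c_puct=1.0):
    root = Node(prior=1.0)
    for _ in range(simulations):
        simulate(root, stones, c_puct)
    # As in AlphaGo, play the move visited most often at the root
    return max(root.children, key=lambda m: root.children[m].visits)


print(best_move(5))
```

With these settings, `best_move(5)` is expected to take 1 stone, which leaves the opponent a multiple of 4. The prior decides which moves get explored first, which narrows the breadth of the search. The value estimate replaces playing each line to the end, which cuts the depth.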

Compared with traditional AI, recent deep learning and the reinforcement learning revived by AlphaGo have shown an ability found in human intelligence: learning continually from sample data and environmental feedback. Overall, however, AlphaGo's subtle algorithm design still rests on brute-force statistical computation over big data, which is completely different from the way human intelligence works. AlphaGo played tens of millions of games and statistically analyzed those positions before reaching human playing strength. A talented human player can reach the same level after only a few thousand games, less than one ten-thousandth as many. AlphaGo's learning efficiency is therefore still very low, which shows that it has not yet touched the most essential part of human intelligence.

More critically, deep learning is still not immune to the theoretical problems that plague traditional AI. Take the robot's frame problem, which requires representing the robot's complex, dynamic environment in real time. Today's deep learning can hardly do this, because its applications are still largely limited to processing large samples of image and speech data. The side effects of an action are highly context-dependent and can hardly exist in the form of big data, so a robot cannot be trained on them with big data. In the end, it is very difficult to produce a neural network that holds human common-sense beliefs, and the frame problem remains hard to solve.

In addition, deep learning needs a large number of training samples supplied by humans, and its parameters must be adjusted constantly during training to obtain the desired output. For example, training AlphaGo's supervised policy network required human game records as training samples, and input features also had to be set by hand. Under these circumstances, humans still set the correspondence between the neural network and the world; the network does not generate it autonomously. Deep learning therefore cannot completely solve the symbol grounding problem.

Third, rather than being wary of artificial intelligence, we should be wary of philosophers.

Of all engineering fields, AI may be the one most closely tied to philosophy. Throughout the history of the philosophy of AI, many philosophers have tried to improve AI's technical approaches with alternative intellectual resources. In this process, philosophy has also played the role of a gadfly. By continually clarifying the nature of human intelligence and cognition, it exposes the weaknesses and limits of AI and ultimately stimulates AI research. Of all philosophers, those most often cited by AI researchers are probably Heidegger and Wittgenstein.

As early as the era of symbolic AI, the American Heidegger scholar Dreyfus criticized the AI of his time. In his view, however complex an AI algorithm may be, it comes down to two steps: representing the world with symbolic logic or neural networks, then planning actions by efficiently processing these representations. This does not fully match how humans behave. According to Dreyfus, a great deal of human behavior involves no representation. Agents interact with the environment directly and in real time, without mapping its changes in their minds in order to plan actions.

Later, Brooks at MIT adopted this "intelligence without representation" approach and designed a robot, Genghis, that can respond to its environment in real time. Brooks did not acknowledge Dreyfus's influence. According to Dreyfus, however, the idea came from a student in Brooks's lab who had taken his philosophy class.

Besides Heidegger, Wittgenstein is another storm center of AI criticism. Around 1939 Wittgenstein taught a course on the foundations of mathematics at Cambridge, and Turing, the AI pioneer mentioned at the beginning, happened to attend it. The later science novel "The Cambridge Quintet" has the two debate whether machines can think, drawing some of its material from their arguments in class. In Wittgenstein's view, both humans and machines act according to certain rules. For machines, however, rules are constitutive, because their operation necessarily depends on rules. For humans, following a rule means consciously obeying it.

But Wittgenstein's greatest influence on AI is his later theory of language. The early Wittgenstein held that language is a collection of propositions describable by symbolic logic, and that the world is made up of facts. Propositions are therefore logical pictures of facts, and we can describe the world through propositions. This idea is completely isomorphic with the conception behind AI. In his later period, however, Wittgenstein abandoned these ideas. He held that the meaning of language lies not in combinations of elementary propositions but in its use: it is our use of language that determines its meaning. Traditional AI tries to establish fixed connections between symbols and objects, and that effort is therefore futile, because the meaning of language can only be established in use.

Drawing on this conception, some AI researchers, such as Steels, have tried to address the symbol grounding problem. Steels designed a population of robots. When one robot sees an object, such as a box, it randomly generates a symbol string, such as "Ahu", to represent it. It then transmits "Ahu" to another robot and lets that robot guess which object "Ahu" refers to. If the second robot correctly points to the box, it receives positive feedback, and the two robots now share the word "Ahu" for the box. Steels calls this process an adaptive language game. By repeatedly playing such games, the robot population can obtain a linguistic description of the world around it, so that the meaning of its symbols is grounded in the world autonomously.
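The adaptive language game can be sketched in a few lines. This minimal simulation makes several assumptions that are not in the article:

- Five software agents stand in for the robots.
- Three objects are given as plain labels, so perception is skipped entirely.
- Words are invented from two random syllables.
- Updates follow the so-called minimal naming game: after a success, both agents discard competing words for that object.

It is a simplified variant, not Steels' actual implementation, and all names in it are hypothetical.

```python
import random

# Hypothetical shared scene and alphabet for invented words (not from the article).
OBJECTS = ("box", "ball", "cup")
CONSONANTS = "bdhkmt"
VOWELS = "aeiou"


def invent_word(rng, used):
    """Make up a fresh two-syllable word, never reused across the population."""
    while True:
        word = "".join(rng.choice(CONSONANTS) + rng.choice(VOWELS) for _ in range(2))
        if word not in used:
            used.add(word)
            return word


class Agent:
    def __init__(self):
        # object -> set of words this agent currently associates with it
        self.lexicon = {obj: set() for obj in OBJECTS}

    def name(self, obj, rng, used):
        if not self.lexicon[obj]:
            self.lexicon[obj].add(invent_word(rng, used))
        # sorted() makes the random choice reproducible for a given seed
        return rng.choice(sorted(self.lexicon[obj]))

    def guess(self, word):
        """Point at an object this agent associates with `word`, or None."""
        for obj in OBJECTS:
            if word in self.lexicon[obj]:
                return obj
        return None


def play_round(speaker, hearer, rng, used):
    topic = rng.choice(OBJECTS)
    word = speaker.name(topic, rng, used)
    if hearer.guess(word) == topic:
        # Positive feedback: both agents keep only the word that worked
        speaker.lexicon[topic] = {word}
        hearer.lexicon[topic] = {word}
        return True
    # Failure: the speaker points at the topic and the hearer learns the word
    hearer.lexicon[topic].add(word)
    return False


def run(num_agents=5, rounds=5000, seed=0):
    rng = random.Random(seed)
    agents = [Agent() for _ in range(num_agents)]
    used = set()
    for _ in range(rounds):
        speaker, hearer = rng.sample(agents, 2)
        play_round(speaker, hearer, rng, used)
    return agents


agents = run()
for obj in OBJECTS:
    print(obj, agents[0].lexicon[obj])
```

Each success prunes competing words, so one word per object tends to spread until every agent uses it. Once all agents share the same single word for an object, every later round about that object succeeds and nothing changes. No one assigns the words from outside, which is the sense in which the text says the meaning of the symbols is grounded autonomously.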

However, these approaches have always been marginal in the history of AI, because the technical resources they rely on are too simple. Fully simulating the human body and the lifeworld is even harder than building the formal systems of traditional AI. But if Heidegger's and Wittgenstein's understanding of the nature of human intelligence is correct, future AI will inevitably need an embodied and distributed approach. For example, we could give AI a body, so that it captures features directly from the environment rather than from training data. Through interaction with the environment and other agents, it could then learn the common sense and language that guide human action. This may be the only road to general AI.

However, this kind of general AI may also be the road to humanity's elimination. Once AI has its own history, its own surrounding world and its own form of life, it can finally break free of human training and feedback incentives and have its own desires and goals. It would then plan its own actions based on those desires. Through continual adaptation and adjustment to the environment, it would embark on the path of evolution and become a new species. If humans came into conflict and competition with it for existence, the limits of human capabilities would leave them quite likely to face elimination.

Therefore, from a philosophical point of view, we do not worry that AI research ignores the arguments of (potential) opponents of AI such as Heidegger and Wittgenstein, since a dedicated weak AI is a good AI. What worries us more is AI researchers adopting their views and combining today's deep learning and reinforcement learning with physical robotics.

AlphaGo itself is not frightening. What would be frightening is an AlphaGo with its own body, consciousness, desires and emotions.
