"Face scanning" was once a term people used to tease one another, and it is now deeply integrated into our lives: phones that unlock with a face, face recognition attendance machines, and even "face scanning" to enter subway stations.

Face recognition technology is increasingly used in a variety of authentication scenarios. Behind an application that seems to finish in the blink of an eye, what less obvious technologies make accurate discrimination possible? What methods do the algorithms use to resist all kinds of fraud attacks?

We recently invited Peng Jianhong, Director of Product Technology, who is responsible for the product design of the FaceID online authentication cloud service. In this open class, he talked about the application of deep learning in Internet identity authentication services, as well as the application scenarios and methods of face liveness detection (action, colorful, video, and silent).

The following is a record of Peng Jianhong's open class lecture:

Today we are mainly talking about FaceID, which is best seen as a solution within our product matrix: a financial-grade solution for identity verification. Many scenarios in daily life require verifying that you are you.

Basically, every Internet finance company verifies that you are you when you borrow money, which requires you to prove your identity. Providing a reliable way to do this has therefore become very important. Many verification methods come to mind, including fingerprint recognition, which has long been widely used, iris recognition, which often appears in movies, and face recognition, which has recently become especially popular.

Let me talk about the technical characteristics. With face recognition, the first feature people think of is that the experience is very good: natural and convenient. But it also has many shortcomings. First, privacy is weaker: obtaining someone's fingerprints or iris is costly, but the cost of getting a photo of someone's face is very small. Second, face recognition is less stable because of factors such as lighting, age, beards, and glasses. Third, fingerprint recognition and iris recognition are active, while face recognition is passive. This is why, when the iPhone X had just come out, many people worried about their faces being scanned by mistake or without their knowledge. Weak privacy, weak stability, and passiveness place higher technical requirements on the commercialization of face recognition.

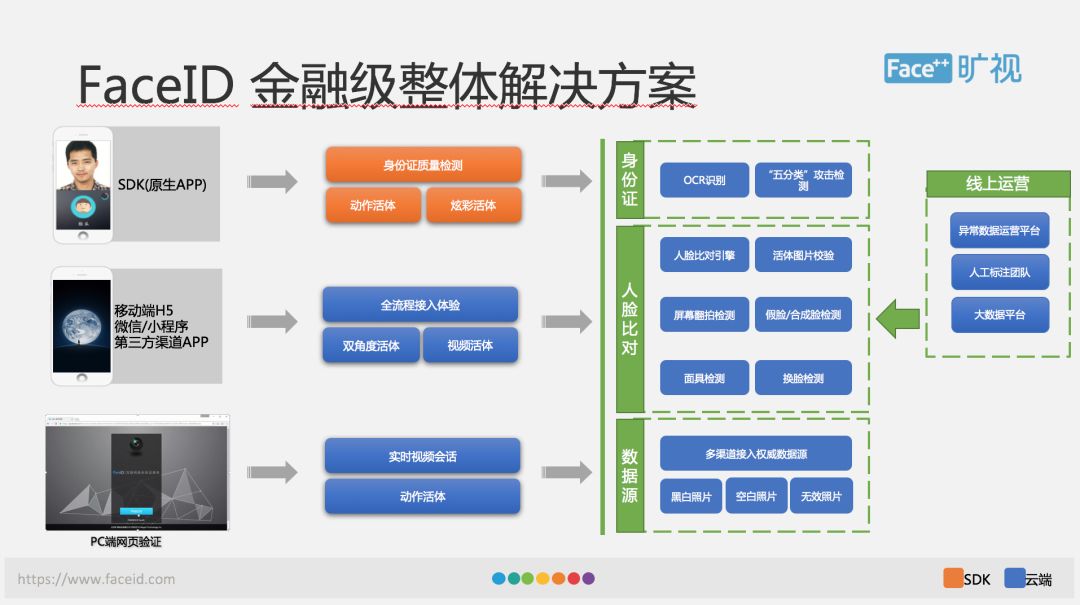

The rapid development of deep learning has greatly improved the accuracy of image recognition, classification, and detection. However, building a financial-grade solution is not so simple. This diagram shows the overall financial-grade FaceID solution.

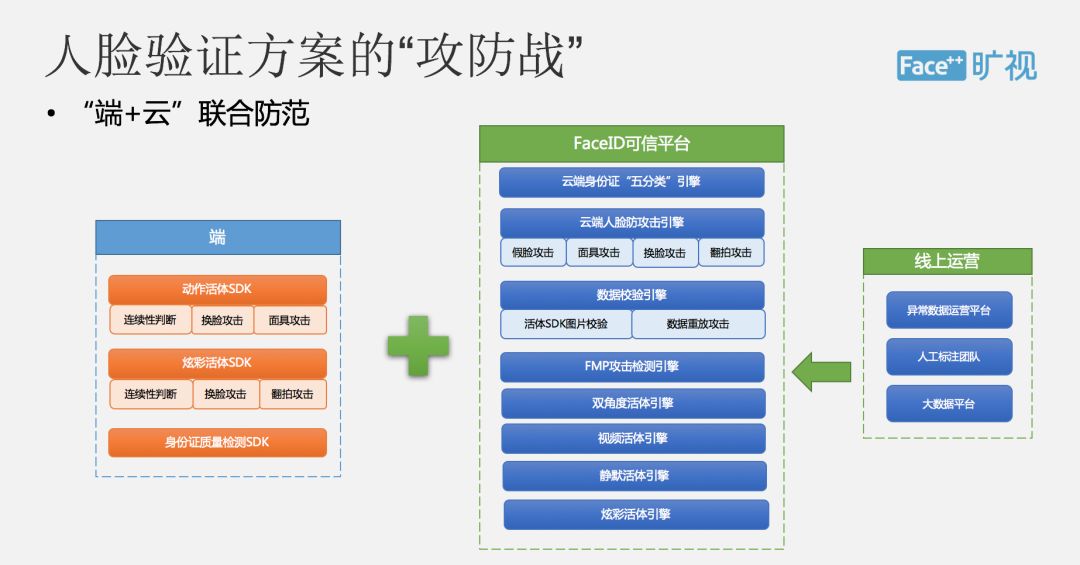

In this architecture diagram, we can see that FaceID offers users a variety of product forms, including a mobile SDK, H5, WeChat/mini programs, third-party channel apps, and PC. Functionally, our products include ID card quality inspection, ID card OCR, liveness detection, attack detection, and face comparison. The whole solution is built on a combination of cloud and device. On the device we provide a UI solution that makes integration easier; if our UI does not meet a customer's requirements, it can also be customized. Liveness detection is the core function of the whole solution, and it is implemented both on the device and in the cloud.

At the same time, different liveness strategies are used for different liveness attack scenarios. In real-world liveness detection, our online operations team collects images in real time, labels them, and updates the algorithm promptly so that the latest attacks can be responded to right away. This also constantly injects new blood into our deep learning algorithms.

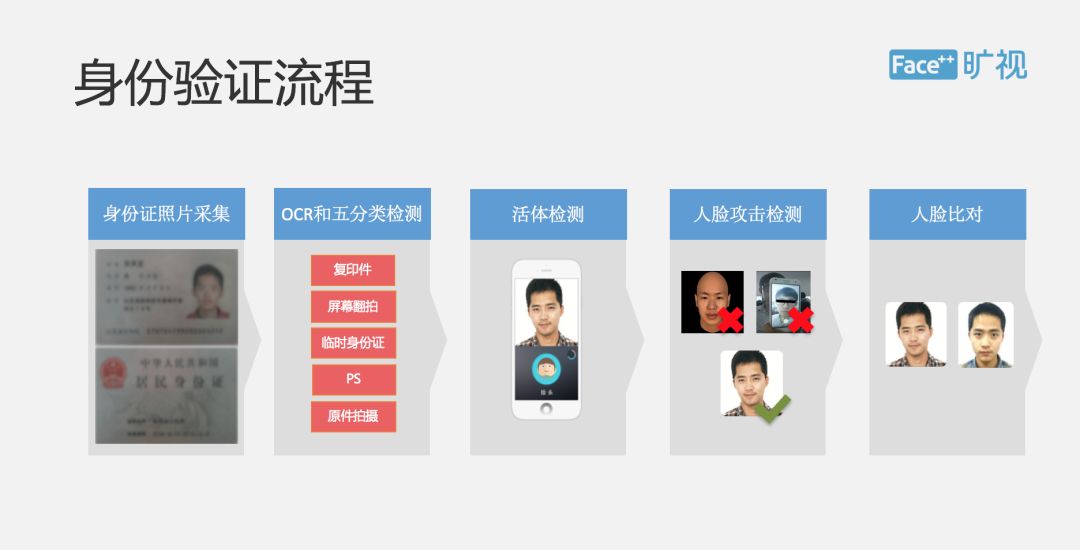

▌ ID card collection

The whole process starts with the user capturing the ID card. The system asks the user to photograph the front and the back of the ID card, and this step is done on the device. After capture, the images are uploaded and OCR is completed in the cloud. We not only recognize the information on the ID card but also classify the card itself. Because business scenarios differ, this classification is returned to the customer, who decides whether to accept the card; in many strict scenarios, customers may accept only the original ID card. The recognized text is also handled differently depending on the customer's business, because some customers require that the end user cannot modify the recognized ID number and name.

We also added many logical checks to the OCR. For example, the ID number encodes the user's birthday and gender. If the birthday printed on the ID card differs from the one encoded in the ID number, we return a logic error in the API result, and the customer can handle it according to their business logic.
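The consistency check described above can be sketched as follows. This is a minimal illustration, not the FaceID implementation: it assumes the standard 18-character mainland China ID number layout (birth date in positions 7 to 14, gender parity in position 17), and the function names and error strings are hypothetical.

```python
from datetime import date
from typing import List, Tuple


def parse_id_number(id_number: str) -> Tuple[date, str]:
    """Extract the birthday and gender encoded in an 18-character ID number."""
    if len(id_number) != 18 or not id_number[:17].isdigit():
        raise ValueError("expected 17 digits followed by a check character")
    # Characters 7-14 (1-based) hold the birth date as YYYYMMDD.
    birthday = date(int(id_number[6:10]), int(id_number[10:12]), int(id_number[12:14]))
    # The 17th character is odd for male and even for female.
    gender = "male" if int(id_number[16]) % 2 == 1 else "female"
    return birthday, gender


def check_consistency(id_number: str, ocr_birthday: date, ocr_gender: str) -> List[str]:
    """Compare the fields printed on the card with those encoded in the number.

    Returns a list of hypothetical error codes; an empty list means consistent.
    """
    birthday, gender = parse_id_number(id_number)
    errors = []
    if birthday != ocr_birthday:
        errors.append("birthday_mismatch")
    if gender != ocr_gender:
        errors.append("gender_mismatch")
    return errors


# Made-up ID number used purely for illustration (checksum not validated).
print(check_consistency("11010119900307123X", date(1990, 3, 7), "male"))
```

Returning a list of error codes rather than raising lets the caller decide, per business scenario, whether a mismatch should block the flow.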

This demonstration shows our ID card capture and ID card OCR. The image is first captured by the phone camera, and OCR and object classification are then performed in our cloud to determine whether it is a genuine ID card. One question worth discussing is why we put OCR in the cloud rather than in the SDK on the phone. This is mainly a security consideration: if the information were tampered with on the device, that would be very dangerous.

▌ Live Attack Detection Scheme

Let's discuss the most important part: liveness attacks. Our products provide a variety of liveness detection schemes, including random action liveness, video liveness, colorful liveness, and more. Liveness detection is the most important part of FaceID and our core strength. This slide shows our action liveness. The user performs random actions such as nodding and shaking the head, following our UI prompts. Each random action is issued by the server side, which helps secure the whole action sequence. There are some technical details behind this, including face quality detection, detection and tracking of facial key points, and 3D pose estimation of the face, which are part of our core technical competitiveness. We also give customers a ready-made UI; if they do not like it, they can modify it directly.
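The idea of having the server issue the random actions can be sketched as follows. This is only an illustration under stated assumptions: the action names beyond nodding and head shaking, the session structure, and the function names are all hypothetical, and real action detection happens in the vision model, not here.

```python
import secrets
from typing import Dict, List

# Hypothetical action vocabulary; the talk only mentions nodding and shaking the head.
ACTIONS = ["nod", "shake_head", "blink", "open_mouth"]


def issue_challenge(num_actions: int = 3) -> Dict[str, object]:
    """Server side: pick a random, non-repeating action sequence for one session."""
    rng = secrets.SystemRandom()  # OS-backed randomness, hard for a client to predict
    return {
        "session_id": secrets.token_hex(16),
        "actions": rng.sample(ACTIONS, num_actions),
    }


def verify_actions(challenge: Dict[str, object], detected: List[str]) -> bool:
    """Server side: accept only if the detected actions match the issued order."""
    return detected == challenge["actions"]


challenge = issue_challenge()
print(challenge["actions"])
```

Because the sequence is generated on the server for each session, a pre-recorded video of a fixed sequence is unlikely to match the challenge.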

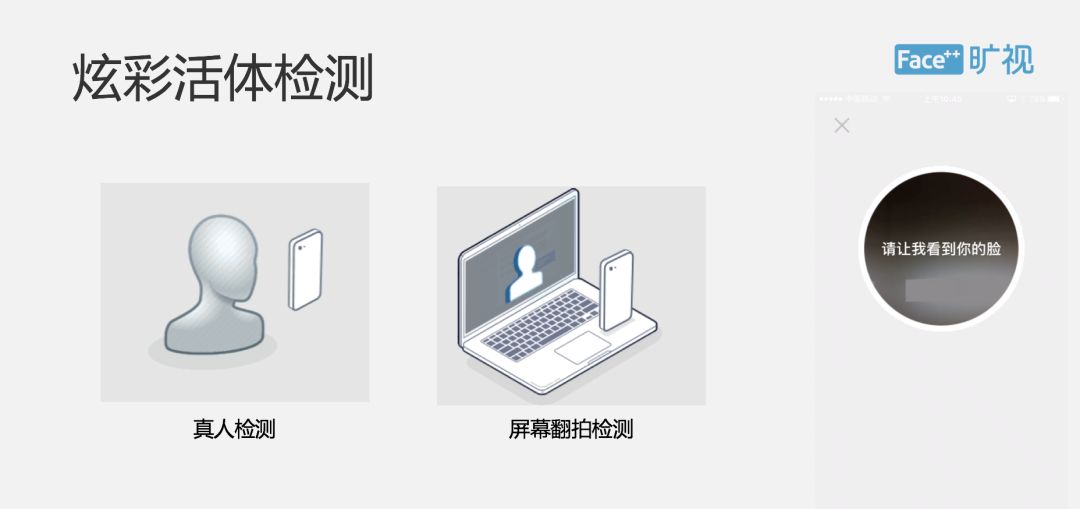

We provide a detection method called colorful liveness, a method unique to Face++ that performs liveness detection based on the principle of three-dimensional imaging from reflected light and, by its very principle, rules out attacks such as videos synthesized with 3D software and screen remakes. In terms of product form, it is itself a video (which may not be visible here): the screen emits a specific pattern that is used for the liveness judgment.

One of the bigger problems with colorful liveness is that it is not very effective under strong light. We therefore pair it with a simple action at the end, which raises the bar for an attack. For mobile H5 scenarios, we mainly introduced a video liveness method: the user reads aloud a four-digit number shown by the UI, and we check not only the speech recognized in the cloud but also the lip movements, as well as whether the voice and the lips are synchronized.

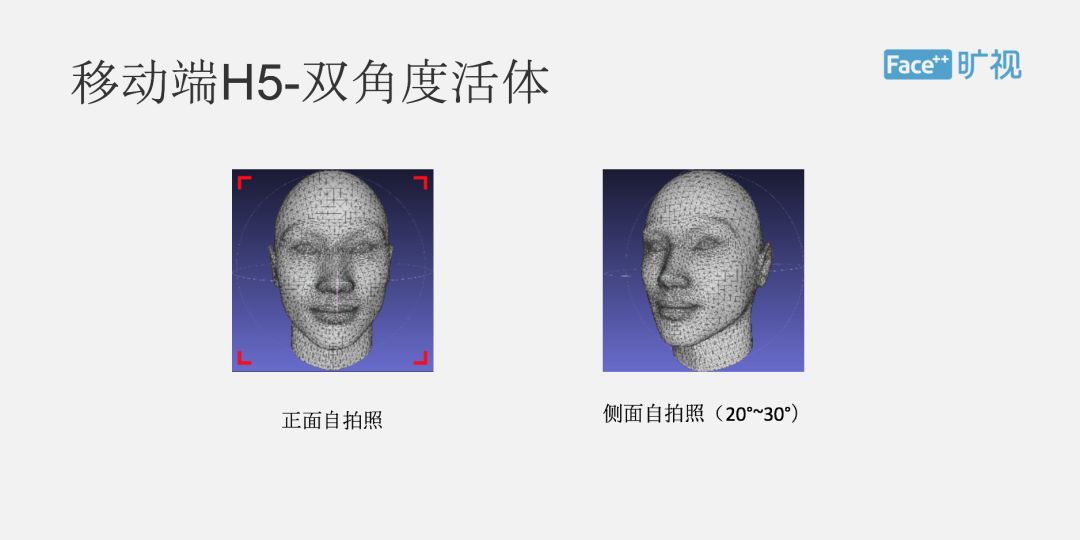

Liveness is judged through these three checks. In addition to the more typical methods just introduced, we are also trying out new ones, including dual-angle liveness and silent liveness. In dual-angle liveness, the user takes a frontal selfie and a side selfie, and 3D reconstruction is used to judge whether it is a real person. Dual-angle and silent liveness both provide a very good user experience; for the user, it amounts to recording a two-second video.

We upload this video to the cloud, where we perform not only single-frame liveness detection but also multi-frame detection that looks at the correlation between frames. Combining the dynamic and static methods in this way, we determine whether the person being tested is real.

In addition to liveness detection, we also provide a set of attack detection called FMP, which can effectively identify remakes and mask attacks. This is done in our cloud. FMP is a deep neural network that we train on a large amount of face data and adjust continuously based on data returned from online traffic, so that its accuracy keeps improving. This is also one of the most important technical difficulties in our liveness detection.

▌ Face comparison

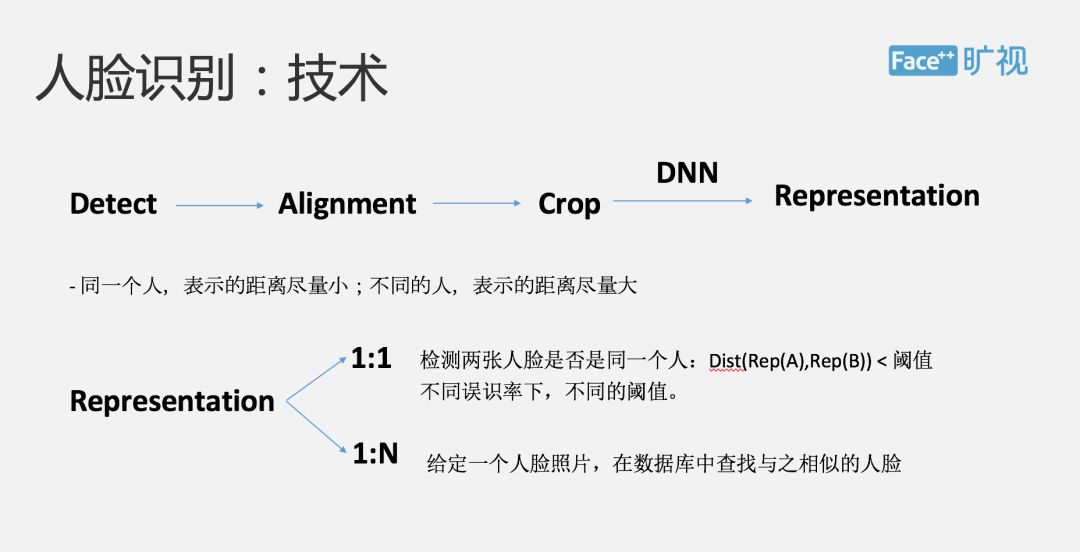

After liveness detection, we can perform face comparison. Let me briefly introduce the basic principle of face recognition. First, we perform face detection on an image, which amounts to finding the face in the image and locating some basic key points on it, such as the eyes and eyebrows.

Next, we align the key points of the face. This serves as data preprocessing for the subsequent face recognition algorithm and improves its accuracy. Then we crop out the face region, so that surrounding objects do not influence the result. The cropped face goes through a deep learning network, which eventually produces something called a representation. We can understand the representation as a vector generated from the image: to the machine, the image is represented by this vector. But how do we measure whether this representation truly portrays the real face?

We have a principle: for the same person, the distance between representations should be as small as possible; for different people, the distance should be as large as possible. This is how we evaluate whether a deep learning representation is good or bad. Based on this representation, there are two major applications in face recognition, which we call 1:1 and 1:N.

The former compares two faces to decide whether they belong to the same person. The principle is that we calculate the distance between the two face representations. If this distance is less than a threshold, we consider them the same person; if it is greater than the threshold, we consider them different people. We provide different thresholds for different misrecognition rates. The second application, 1:N, is mainly used in security: given a face photo, we search a database of known faces for the most similar one. FaceID mainly applies the 1:1 scenario.
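The 1:1 and 1:N comparisons described above can be sketched as follows. This is a minimal sketch, not the FaceID algorithm: it assumes representations are plain float vectors compared by Euclidean distance, and the vectors, names, and threshold are made up for illustration.

```python
import math
from typing import Dict, List, Tuple


def distance(a: List[float], b: List[float]) -> float:
    """Euclidean distance between two face representation vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def verify_1_to_1(a: List[float], b: List[float], threshold: float) -> bool:
    """1:1: same person if the distance falls below the threshold."""
    return distance(a, b) < threshold


def search_1_to_n(probe: List[float], gallery: Dict[str, List[float]]) -> Tuple[str, float]:
    """1:N: return the identity in the gallery closest to the probe, with its distance."""
    best = min(gallery, key=lambda name: distance(probe, gallery[name]))
    return best, distance(probe, gallery[best])


# Toy 3-dimensional vectors; real representations have far more dimensions.
gallery = {"alice": [0.1, 0.9, 0.2], "bob": [0.9, 0.1, 0.4]}
probe = [0.12, 0.88, 0.21]

print(verify_1_to_1(probe, gallery["alice"], threshold=0.5))
print(search_1_to_n(probe, gallery)[0])
```

In practice the threshold would be chosen from the target misrecognition rate rather than hard-coded.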

Once we have recognized the user's name and ID number through OCR and liveness detection has passed, we obtain an authoritative photo from the Ministry of Public Security's database and compare it with a high-quality photo captured from the user's video. We then tell the customer whether the two match. Of course, we do not directly state whether they match; instead, we report a similarity score.

Look at the table on the left. The return value provides thresholds at misrecognition rates of one in a thousand, one in ten thousand, and one in a hundred thousand; each misrecognition rate has its own threshold. Suppose the threshold at a given rate is 60: a score above 60 means we consider the two photos to be the same person at that rate. If the similarity of two photos is 75, we might say they are the same person at a misrecognition rate of one in ten thousand, but at a rate of one in a hundred thousand they may not be.
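The relationship between one similarity score and several per-rate thresholds can be sketched as follows. The threshold values here are invented for illustration and are not FaceID's actual values; the only assumption carried over from the talk is that a stricter misrecognition rate requires a higher score.

```python
from typing import Dict

# Hypothetical thresholds: a lower misrecognition rate demands a higher score.
THRESHOLDS: Dict[str, float] = {
    "1e-3": 60.0,  # one in a thousand
    "1e-4": 70.0,  # one in ten thousand
    "1e-5": 80.0,  # one in a hundred thousand
}


def decide(score: float, thresholds: Dict[str, float]) -> Dict[str, bool]:
    """For each misrecognition rate, report whether the score clears its threshold."""
    return {rate: score > limit for rate, limit in thresholds.items()}


# A score of 75 passes at 1e-3 and 1e-4 but not at 1e-5.
print(decide(75.0, THRESHOLDS))
```

Returning the score together with the thresholds, rather than a single yes or no, lets each customer pick the rate that suits their risk tolerance.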

There is one detail here. The photos from our data source may come with different kinds of interference at different times. Normally the photo carries a texture pattern, but sometimes we get a blank photo or a black-and-white photo, which requires some back-end processing on our side.

To sum up, FaceID provides a complete authentication solution. It covers a series of functions such as quality inspection, ID card recognition, liveness detection, attack detection, and face comparison. For liveness detection, we adopt joint defense across cloud and device. Through a variety of liveness detection schemes, including action liveness, video liveness, and silent liveness, we can effectively prevent fake-face attacks.

Online, we encounter a variety of attacks every day. Face verification is a long-term battle of attack and defense. Through online operations we continuously collect abnormal attack data, annotate it manually, and use it for training and analysis, which keeps strengthening the model's defenses. We have formed a closed loop here: once an attack is found, we can update the relevant models online within a very short time to defend against it.

▌ Industrial AI algorithm production

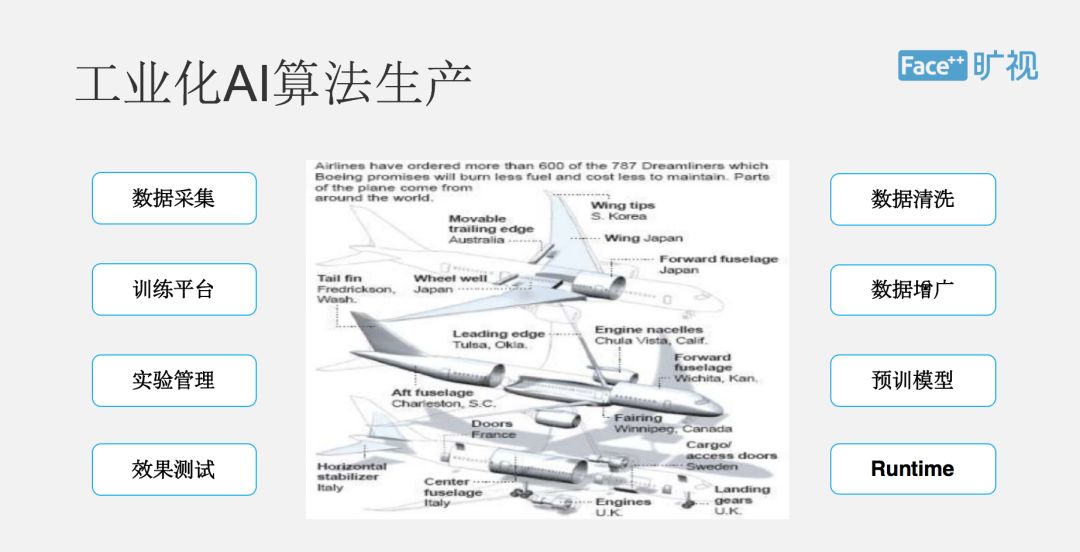

Next, I will briefly introduce the whole process of industrial AI algorithm production. The entire process can be seen as data-driven, including data collection, cleaning, labeling, data augmentation, pre-trained models, experiment management, SDK packaging, and so on.

Let me introduce some key points. The first is data collection. We have a team called Data++ in charge of data collection and labeling. We collect data offline, or obtain it through crowdsourced labeling and web crawlers; this data is the raw material for AI training.

Once we have the data, there is our platform called Brain++, which you can think of as the "alchemy furnace" for our AI work. It manages IaaS layers such as compute, storage, and network. For our algorithm engineers, training feels like running on a single machine, while the underlying distributed scheduling across multiple machines is hidden by the Brain++ platform. An engineer can write something like a statement requesting 20 CPUs, 4 GPUs, and 8 GB of memory to run a training script; underneath, training is distributed, but what the engineer sees is a single-machine way of running the script.

In addition to the data and the IaaS-layer resources, we developed Megbrain, a parallel computing framework and engine similar to TensorFlow. Compared with TensorFlow, it has been optimized differently in many areas.

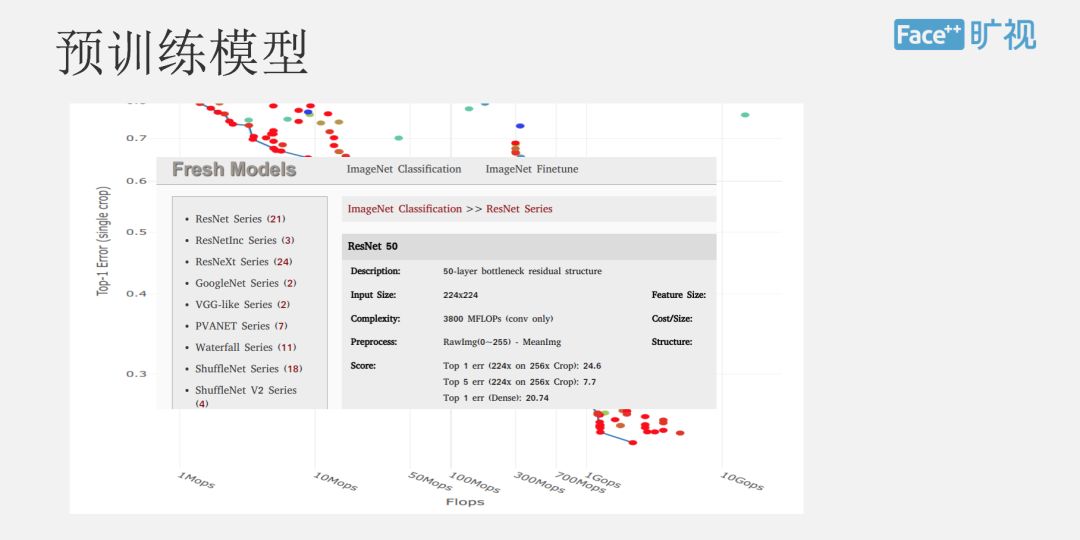

Let's talk about our pre-trained models. Our team trains tens of thousands of them, and this diagram shows some. Each point in the diagram is an experiment. If it is a good experiment, we put it on a website for other algorithm engineers to use. We hope that an experiment management platform helps them organize their experimental ideas, as well as the dependencies between experiments.

With these models, IaaS-layer resources, data, and experiment management in place, the rest is up to the imagination of each algorithm engineer. Everyone reads papers every day and thinks through all kinds of sophisticated network design schemes in order to create a very good network model. Industrialized AI production is therefore a team effort: we have various supporting systems, and everyone builds on these existing resources to create a complete AI system.
