In view of the current technical bottlenecks of deep learning, many academicians and professors, including Zhang Bo of Tsinghua University, have offered their own research ideas.

At the just-concluded CCF-GAIR conference, academicians and professors from Tsinghua University, the University of California, Berkeley, Stanford University, Harbin Institute of Technology and other leading universities in China and abroad gathered in Shenzhen to share their latest research. Although their research specialties differ, it was hard to miss that, throughout the technical presentations, many of them were directly or indirectly "criticizing" deep learning algorithms.

In their talks, they once again clearly pointed out the shortcomings of deep learning and suggested that in the foreseeable future, as research advances, today's deep learning algorithms will gradually be knocked off their pedestal.

However, if you follow academia into industrial applications, you will find that industry focuses on putting technology into practice. So-called deployment is essentially the aggregation of countless application scenarios. For AI companies, therefore, exploring the business and using appropriate technology to solve practical problems come first.

So while deep learning has shortcomings, they will not stop the unstoppable development of AI in the short term. The limitations of the technology do not mean that AI companies have nothing to do.

However, highbrow academic research has always led the technology trends of the AI industry, and it is also a key driving force for corporate profitability and industrial transformation.

Therefore, at a time when deep learning is over-hyped, we should try to stand on the "shoulders" of academicians and look farther. While keeping their feet on the ground, entrepreneurs should also not forget to look up at the stars.

Although this is not a new topic, the industry has yet to find a solution. This article aims to convey some new ideas offered by academic researchers.

The ills of machine learning: a misunderstanding of "big"

At the moment, the most frequently mentioned terms are machine learning, deep learning and neural networks. In set-theoretic terms, the three are nested: machine learning includes deep learning, and deep learning includes neural networks. A neural network with more than four layers can be called deep learning, and deep learning is a typical form of machine learning.

Neural network algorithm structures appeared in the 1950s. At that time the model was officially called the perceptron, but it already had the classic general structure of an input layer, a hidden layer and an output layer. The more layers a network has, the more accurately it can describe things.
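The value of a hidden layer can be illustrated with a minimal sketch, assuming hand-picked weights and the step activation of classic perceptrons (all names and numbers below are hypothetical, not from the talks). A single threshold unit cannot compute XOR, but a network with one hidden layer can:

```python
def step(x: float) -> int:
    """Threshold activation used by classic perceptrons."""
    return 1 if x > 0 else 0

def layer(inputs, weights, biases):
    """One fully connected layer: each unit thresholds a weighted sum of its inputs."""
    return [step(sum(w * x for w, x in zip(row, inputs)) + b)
            for row, b in zip(weights, biases)]

# Hypothetical hand-picked weights: hidden unit 0 behaves like OR, unit 1 like AND.
HIDDEN_W = [[1.0, 1.0], [1.0, 1.0]]
HIDDEN_B = [-0.5, -1.5]
# The output unit fires when OR is true but AND is not, which is XOR.
OUTPUT_W = [[1.0, -1.0]]
OUTPUT_B = [-0.5]

def forward(x1: int, x2: int) -> int:
    """Input layer -> hidden layer -> output layer."""
    hidden = layer([x1, x2], HIDDEN_W, HIDDEN_B)
    return layer(hidden, OUTPUT_W, OUTPUT_B)[0]

for a in (0, 1):
    for b in (0, 1):
        print(a, b, "->", forward(a, b))
```

The hidden units compute simple features (OR, AND) and the output combines them, something no single threshold unit can do on its own.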

However, a neural network is a data-driven algorithm, so high-quality results depend on data approaching an "infinite" magnitude. As a result, until the Internet revolution broke out around 2000, neural networks attracted little interest.

As everyone knows, the huge amount of data accumulated in the Internet era and the substantial increase in computing power brought by cloud computing have greatly released the potential of deep learning algorithms (deep neural networks), ushering in the full explosion of the artificial intelligence era and allowing applications to flourish. Data show that in 2017 China's artificial intelligence market reached 21.69 billion yuan, up 52.8% year-on-year, and the market is expected to reach 33.9 billion yuan in 2018.
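The market figures can be cross-checked with simple arithmetic. The sketch below uses only the three numbers quoted above; by this arithmetic they imply a 2016 market of roughly 14.2 billion yuan and growth of about 56% in 2018 if the forecast holds.

```python
market_2017 = 21.69    # billion yuan, as quoted in the article
growth_2017 = 0.528    # year-on-year growth in 2017, as quoted
forecast_2018 = 33.9   # billion yuan, forecast as quoted

# Undo the 2017 growth to recover the implied 2016 market size.
implied_2016 = market_2017 / (1 + growth_2017)
# Growth rate the 2018 forecast implies relative to 2017.
implied_growth_2018 = forecast_2018 / market_2017 - 1

print(f"Implied 2016 market: {implied_2016:.2f} billion yuan")
print(f"Implied 2018 growth: {implied_growth_2018:.1%}")
```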

However, as industrial applications mature and people increasingly want true "intelligence", the limitations of computing power and of deep learning algorithms themselves have become unmistakable.

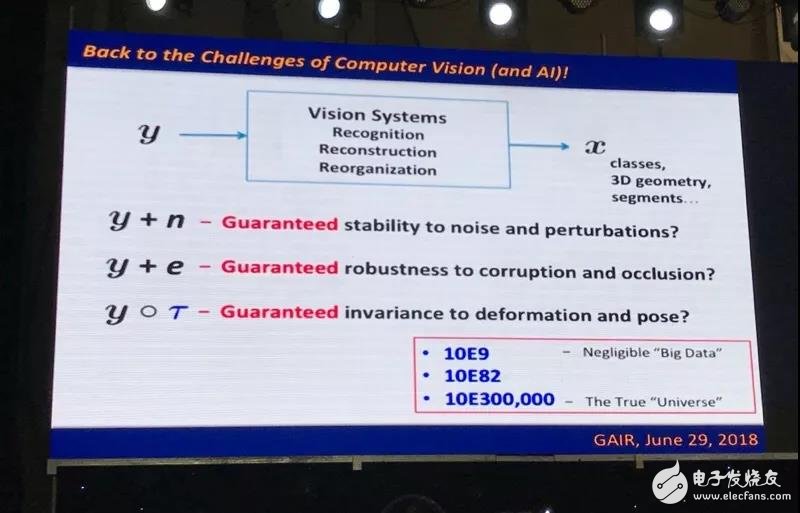

Figure | Ma Yi, Professor, Department of Electrical Engineering and Computer Sciences, University of California, Berkeley

"The 'big data' that ordinary people have in mind is completely different from what we mean by big data. Take image processing: billions of data points seem like a lot, but to us that is actually a 'small sample'. The amount of data needed to train a truly good model would have to be infinite, so even if you train a model on a great deal of data, there is still an essential gap between it and an ideal intelligent model." Speaking from the nature of the algorithms themselves, Ma Yi, a professor in the Department of Electrical Engineering and Computer Sciences at the University of California, Berkeley, pointed out the limitations of today's hot technology.

As a result, for academics, investors and leading AI companies alike, finding new technologies and directions has become the current focus.

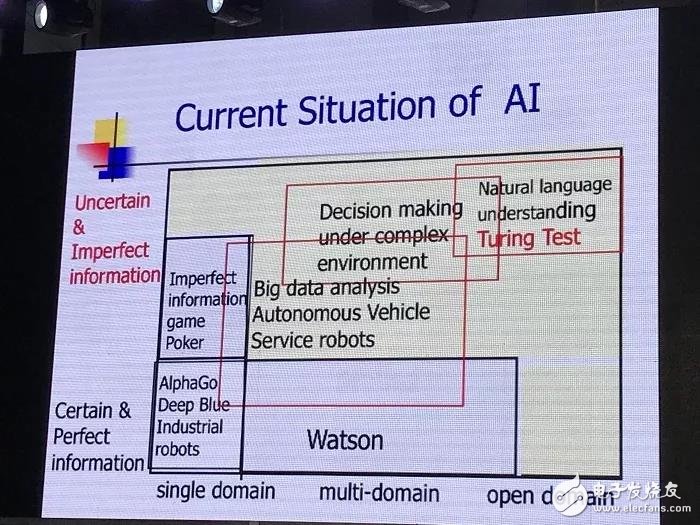

In the opening report of the conference, Academician Zhang Bo of Tsinghua University called on everyone to think about "how to move towards true artificial intelligence". This became the keynote of the three-day conference, and it also reflected what the industry needs at its current stage of development.

New directions: data processing methods, basic ideas and technical ideas

● Data processing: the semantic vector space may open a new way in

Seeing the "ceiling" of the technology, many experts and scholars have begun to propose the concept of "small data". However, Academician Zhang Bo, Dean of the School of Artificial Intelligence at Tsinghua University, does not believe that the amount of data is the fundamental problem at the moment. He pointed out that the traditional three elements of artificial intelligence will not bring true intelligence.

Figure | Zhang Bo, Dean of School of Artificial Intelligence, Tsinghua University and Academician of Chinese Academy of Sciences

"To evaluate the achievements of artificial intelligence, we can look at these five things: Deep Blue defeated the human chess champion; IBM's system defeated the two top champions of a United States TV quiz show; in 2015, Microsoft's image recognition on ImageNet achieved a misrecognition rate slightly lower than that of humans; Baidu and iFlytek announced that the error rate of their single-sentence Chinese speech recognition was slightly lower than that of humans; and AlphaGo defeated Lee Sedol. The first two fall into one category, and the last three into another.

Everyone agrees that the three elements behind these five achievements are big data, increased computing power and very good artificial intelligence algorithms. But I think everyone has overlooked one factor: all of these results were achieved in suitable scenarios."

In other words, the current development of artificial intelligence cannot escape various constraints. Intelligent machines can only act according to rules, without any flexibility, and cannot reach the intelligence people want. This is the current state of AI development.

"Our current basic methods of artificial intelligence are flawed, and we must move towards AI with comprehension capabilities. This is true artificial intelligence." Academician Zhang Bo pointed out in his speech.

So what is the solution? Step by step, Academician Zhang laid out his ideas in his speech and pointed to the semantic vector space as the technical direction.

"First of all, it needs to be clear that the reason why the existing machine lacks reasoning ability is that it has no common sense."

Academician Zhang Bo has verified through experiments that establishing common sense does greatly improve machine performance. Building a knowledge base for machines has therefore become the first step for artificial intelligence companies seeking to further improve system performance. "The United States started such a common-sense knowledge base project in 1984, and it still hasn't been fully completed. So there is a long way to go toward true artificial intelligence, that is, artificial intelligence with understanding."
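To make the idea of a common-sense knowledge base concrete, here is a toy sketch, assuming a hypothetical is-a hierarchy with inherited properties. It is not the design of the US project or of Academician Zhang's system; it only shows how stored common sense lets a machine answer questions it was never told directly.

```python
# Hypothetical toy facts; real common-sense bases hold vastly more assertions.
IS_A = {
    "penguin": "bird",
    "sparrow": "bird",
    "bird": "animal",
    "animal": "living_thing",
}
PROPERTIES = {
    "bird": {"has_feathers": True, "can_fly": True},
    "penguin": {"can_fly": False},  # a specific exception overrides the inherited default
    "living_thing": {"needs_energy": True},
}

def ancestors(concept):
    """Walk the is-a chain from a concept up to its most general category."""
    chain = [concept]
    while chain[-1] in IS_A:
        chain.append(IS_A[chain[-1]])
    return chain

def lookup(concept, prop):
    """Return the most specific known value of prop, or None if nothing is known."""
    for c in ancestors(concept):
        if prop in PROPERTIES.get(c, {}):
            return PROPERTIES[c][prop]
    return None

print(lookup("sparrow", "can_fly"))
print(lookup("penguin", "can_fly"))
print(lookup("penguin", "needs_energy"))
```

Nothing says directly that a penguin needs energy; the answer comes from chaining is-a links, which is the kind of inference a machine without common sense cannot make.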

But even with a common-sense knowledge base in place, achieving artificial intelligence with the ability to understand is still not easy. In Academician Zhang's view, the second step toward greater intelligence is to unify the worlds of perception and knowledge, which would bring a qualitative leap in the development of artificial intelligence.

"The reason deep learning has been able to greatly advance artificial intelligence is that people can convert the acquired scalar data into vectors that machines can use. But so far, combining behavior (feature vectors) with data (symbol vectors) has always been a difficult problem in research, and this keeps machines from becoming more 'intelligent'."

Not only that: from a security perspective, purely data-driven systems also have major problems, namely poor robustness and high susceptibility to interference. As a result, even after training on a large number of samples, such systems still make major mistakes. Leading companies such as SenseTime and Megvii have also stated that even when a trained model's accuracy is as high as 99%, the system still makes many "dumb" errors in practical applications.

"The solution we have come up with is to project the feature vector space and the symbol vector space into a single space, which we call the semantic vector space."

How is this done?

Academician Zhang described two steps. First, use embedding to turn symbols into vectors while losing as little of their semantics as possible. Second, use "raising", which draws on neuroscience to lift the feature space into the semantic space.

"Only by solving these problems can we establish a unified theory. In the past, perception and cognition were processed in different ways, so the two were not in the same dimension and could not be handled in a unified manner. But if we can project perception and knowledge into the same space, we can establish a unified theoretical framework and solve the problem of understanding in the semantic vector space. This is our goal, but the work is very difficult."
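The two steps can be illustrated with a toy sketch, assuming hand-set vectors: a hypothetical embedding table stands in for step one (symbols as vectors), and a hypothetical linear projection stands in for "raising" a perceptual feature vector into the same space. In a real system both would be learned; this is a generic shared-embedding illustration, not the method described in the talk.

```python
import math

# Step 1 (hypothetical): symbols embedded as vectors in a 3-D semantic space.
SYMBOL_EMBEDDINGS = {
    "cat": [1.0, 0.0, 0.0],
    "dog": [0.0, 1.0, 0.0],
    "car": [0.0, 0.0, 1.0],
}

# Step 2 (hypothetical): a linear map from a 4-D perceptual feature space
# into the same 3-D semantic space. A real system would learn this matrix.
PROJECTION = [
    [1.0, 0.0, 1.0, 0.0],
    [0.0, 1.0, 0.0, 0.0],
    [0.0, 0.0, 0.0, 1.0],
]

def project(features):
    """Map a perceptual feature vector into the semantic space (matrix-vector product)."""
    return [sum(w * f for w, f in zip(row, features)) for row in PROJECTION]

def cosine(u, v):
    """Cosine similarity between two equal-length vectors (0.0 if either is zero)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0

def nearest_symbol(features):
    """Once both live in one space, a percept can be compared directly with symbols."""
    semantic = project(features)
    return max(SYMBOL_EMBEDDINGS, key=lambda s: cosine(semantic, SYMBOL_EMBEDDINGS[s]))

image_features = [0.9, 0.1, 0.8, 0.0]  # hypothetical output of a perception model
print(nearest_symbol(image_features))
```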

● Overturning basic ideas: fuzzy computing may be the future

"Whether it is knowledge graphs, semantic vector spaces or the other kinds of deep learning training in use today, they are all based on probability and statistics, whereas fuzzy logic is not: it is based on fuzzy set theory." Taking a bold, conceptual stance, Cheng Hengda, a tenured professor of computer science at Utah State University in the United States, offered a disruptive idea.

Figure | Cheng Hengda, tenured professor, Department of Computer Science, Utah State University, USA

In fact, fuzzy logic is not an entirely new concept. In 1931, Kurt Gödel published a paper proving the "incompleteness theorem" for formal number theory (that is, the logical system of arithmetic), and fuzzy logic was born. In 1965, L. A. Zadeh of the University of California, Berkeley published a paper on fuzzy sets, marking the first time humans successfully described uncertainty with a mathematical theory.
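Zadeh's idea can be shown in a few lines. In a fuzzy set, each element belongs to the set to a degree between 0 and 1, and his 1965 paper combined fuzzy sets with min (intersection), max (union) and 1 - x (complement). The membership functions for "tall" and "heavy" below are hypothetical choices for illustration.

```python
def tall(height_cm: float) -> float:
    """Hypothetical membership function for the fuzzy set 'tall people'."""
    if height_cm <= 160:
        return 0.0
    if height_cm >= 190:
        return 1.0
    return (height_cm - 160) / 30  # linear ramp between 160 cm and 190 cm

def heavy(weight_kg: float) -> float:
    """Hypothetical membership function for the fuzzy set 'heavy people'."""
    if weight_kg <= 60:
        return 0.0
    if weight_kg >= 100:
        return 1.0
    return (weight_kg - 60) / 40  # linear ramp between 60 kg and 100 kg

# Zadeh's operators: intersection = min, union = max, complement = 1 - x.
def fuzzy_and(a: float, b: float) -> float:
    return min(a, b)

def fuzzy_or(a: float, b: float) -> float:
    return max(a, b)

def fuzzy_not(a: float) -> float:
    return 1.0 - a

t, h = tall(181), heavy(70)
print(t, h)
print(fuzzy_and(t, h), fuzzy_or(t, h), fuzzy_not(t))
```

Unlike a classical set, a 181 cm person is neither simply "tall" nor "not tall"; they are tall to degree 0.7, and the operators propagate such degrees instead of forcing a 0 or 1.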

"In computing today, everything is either 0 or 1, while we describe the many uncertain states between 0 and 1. In fact, this process describes the causes behind a result. Take two bottles of water as an example. One bottle is labeled 'the probability that this is pure water is 0.91', and the other is labeled 'water purity is 0.91'. Which one would you choose? Obviously, you would choose the latter. The thinking and judgment process here is fuzzy logic, because the latter describes a degree, which is inherently vague."
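One way to read the two labels, offered here as an assumption rather than Professor Cheng's own formalization: the probability label says the bottle is either fully pure or not, with no promise about the remaining 9%, while the degree label describes the water itself. The sketch shows that both give 0.91 on average but have very different worst cases.

```python
# Bottle A, "probability of pure water is 0.91": assumed fully pure (purity 1.0)
# with probability 0.91. The label promises nothing about the other 9%, so we
# assume the worst case there (purity 0.0). These modeling choices are hypothetical.
bottle_a = [(0.91, 1.0), (0.09, 0.0)]  # (probability, purity) pairs

# Bottle B, "purity is 0.91": a single fuzzy membership degree of the water in
# the set "pure water", with no chance element involved.
bottle_b_degree = 0.91

expected_a = sum(p * purity for p, purity in bottle_a)
worst_a = min(purity for p, purity in bottle_a if p > 0)

print(f"A: expected purity {expected_a:.2f}, worst case {worst_a:.2f}")
print(f"B: purity {bottle_b_degree:.2f} in every case")
```

Under this reading, choosing bottle B means choosing a guaranteed degree over a gamble, which is the distinction between fuzzy membership and probability that the quote draws.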

Today, much like the classical logic system (with derived disciplines such as calculus, linear algebra and biology), fuzzy logic has gradually developed into a logical system of its own.

However good a technology is, it must be combined with applications to show its advantages. Professor Cheng pays special attention to this, which is why he chose the field of early breast cancer diagnosis. "So far, the samples we designed have been used by more than 50 teams in more than 20 countries around the world."

In Professor Cheng's view, existing technology has very obvious shortcomings, and everyone needs to settle down, analyze the problems and explore ways to improve. "Now everyone is simulating the electrical signals in brain waves, but in fact the brain has not only electrical signals but also chemical reactions. And much of the medical image processing people are doing is really just image processing, not medicine. The two are very different."

● Technical ideas: from large to simple

At present, with the technology making little progress, the anxiety of AI companies is obvious. Unlike the academicians and professors above, who offered specific technical ideas, Professor Ma Yi is more like the "Lu Xun" (the famously sharp-tongued Chinese writer and social critic) of the science and technology world. Using high-quality papers cited in the slides of his talk as examples, he set out to reawaken everyone's thinking about AI.

Figure | Selected slides from Professor Ma Yi's talk

"If the input data to a neural network changes slightly, the classification can change drastically. This is not a new discovery. Everyone ran into this problem in 2010, and it has still not been solved." At the start of his speech, Ma Yi laid out this "truth" and poured cold water on the many people who are blindly optimistic about AI.
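The instability Ma Yi describes can be reproduced even with a linear classifier. The sketch below applies the fast gradient sign idea (step every input coordinate slightly against the sign of the score's gradient) to a hypothetical 1,000-dimensional linear model; the weights, bias and input are made up for illustration. Each coordinate moves by only 0.01, yet the decision flips because the tiny changes add up across dimensions.

```python
N = 1000  # hypothetical input dimension, e.g. pixels of a small image
W = [0.1 if i % 2 == 0 else -0.1 for i in range(N)]  # hypothetical trained weights
B = 0.5   # hypothetical bias

def score(x):
    """Linear decision score: positive means class 'A', negative means class 'B'."""
    return sum(w * xi for w, xi in zip(W, x)) + B

x = [0.5] * N  # a hypothetical input classified as 'A' (score 0.5)
eps = 0.01     # maximum change allowed per coordinate

# For a linear model the gradient of the score with respect to x is W, so moving
# each coordinate by -eps * sign(w) lowers the score by eps * sum(|w|) = 1.0 here.
x_adv = [xi - eps * (1.0 if w > 0 else -1.0) for xi, w in zip(x, W)]

print("original score:", score(x))
print("perturbed score:", score(x_adv))
print("largest per-coordinate change:", max(abs(a - b) for a, b in zip(x, x_adv)))
```

The larger the input dimension, the more such small, coordinated changes accumulate, which is one common explanation for why high-dimensional models are fragile.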

Ma Yi also did his best to correct misconceptions about the technology.

"In face recognition, making an algorithm robust is a thousand times harder than writing an AlphaGo."

"People say the bigger the neural network, the better. This is nonsense."

Beyond the jokes and jabs, and drawing on several years of research, Ma Yi offered his own direction: "Truly high-quality algorithms must be the simplest ones, such as iteration, recursion and the classic ADMM. These simple algorithms are elegant and very useful."
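As a taste of the classic ADMM Ma Yi mentions, here is a minimal sketch on a toy problem chosen for illustration (not one from his slides): minimize 0.5 * ||x - b||^2 + lam * ||z||_1 subject to x = z. Each ADMM step has a closed form, and the iterates converge to the soft-thresholded vector, whose exact answer is known, so the result is easy to check.

```python
def soft_threshold(v: float, k: float) -> float:
    """Proximal operator of k * |.|: shrink v toward zero by k."""
    if v > k:
        return v - k
    if v < -k:
        return v + k
    return 0.0

def admm_denoise(b, lam=1.0, rho=1.0, iters=200):
    """Scaled-form ADMM for: min 0.5*||x - b||^2 + lam*||z||_1  subject to  x = z."""
    n = len(b)
    z = [0.0] * n
    u = [0.0] * n  # scaled dual variable
    for _ in range(iters):
        # x-update: minimize 0.5*(x - b)^2 + (rho/2)*(x - z + u)^2, solved in closed form.
        x = [(bi + rho * (zi - ui)) / (1.0 + rho) for bi, zi, ui in zip(b, z, u)]
        # z-update: proximal step on the l1 term.
        z = [soft_threshold(xi + ui, lam / rho) for xi, ui in zip(x, u)]
        # Dual update: accumulate the constraint violation x - z.
        u = [ui + xi - zi for ui, xi, zi in zip(u, x, z)]
    return z

b = [3.0, -0.5, 1.2, -2.0]
print(admm_denoise(b))
```

The result matches applying soft_threshold with threshold lam to each entry of b, up to floating-point error: a few lines of plain iteration solve a nonsmooth problem, which is the kind of simplicity the quote praises.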

Conclusion

Looking ahead, the outlook for artificial intelligence technology is not rosy, and the industry in particular will enter a slack period, but this does not mean that academia and industry will have nothing to do.

Figure | Selected slides from Academician Zhang Bo's talk

As Academician Zhang Bo pointed out, "We are on the road to real AI, but we have not gone far; we are still near the starting point. Artificial intelligence will always be on the road. Everyone must be mentally prepared, and this is the charm of artificial intelligence."
