A multi-fingered robot has learned to grasp by practicing on virtual objects in a simulated environment, and the era in which machine learning and cloud services transform traditional manual labor may not be far off. In a lab at the University of California, Berkeley, an ordinary-looking robot is picking up oddly shaped objects. Remarkably, it acquired this skill by working with virtual objects.

The robot draws on a large body of data about 3D shapes and how to grip them, and can determine how to grab different items with different grip strengths. Berkeley researchers fed these images to the robot's deep learning system, which is connected to the robot's arm and a 3D sensor. When a new object is placed in front of the robot, the deep learning system quickly matches it against what it has learned, selects a suitable shape model and grip, and directs the arm accordingly.

The robot's grasping performance is reported to be the best achieved to date. In tests, whenever the robot estimated its chance of successfully gripping an object at above 50%, it was able to lift the object; there was sometimes some shaking, but the object was dropped only 2% of the time. If the robot judges an object to be difficult to grasp, it nudges the object into a more suitable position, and the grip success rate after adjusting the angle is as high as 99%.
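The decision rule described above can be illustrated with a short Python sketch. This is only an illustration under stated assumptions, not the Berkeley team's implementation: the names `plan_grasp`, `predict_success`, `nudge`, and `max_nudges` are hypothetical, and the only value taken from the article is the 50% threshold.

```python
from dataclasses import dataclass
from typing import Callable

# From the article: the robot attempts a grasp when its predicted
# success rate is above 50%.
GRASP_THRESHOLD = 0.5


@dataclass
class Decision:
    action: str        # "grasp" or "give_up"
    nudges: int        # how many times the object was repositioned
    confidence: float  # last predicted probability of success


def plan_grasp(
    predict_success: Callable[[], float],  # hypothetical: returns estimated success in [0, 1]
    nudge: Callable[[], None],             # hypothetical: repositions the object
    max_nudges: int = 3,                   # assumption: the article gives no retry limit
) -> Decision:
    """Grasp if confidence is high enough; otherwise nudge the object and re-estimate."""
    nudges = 0
    confidence = predict_success()
    while confidence <= GRASP_THRESHOLD and nudges < max_nudges:
        nudge()
        nudges += 1
        confidence = predict_success()
    action = "grasp" if confidence > GRASP_THRESHOLD else "give_up"
    return Decision(action, nudges, confidence)


# Simulated estimates: two low-confidence readings, then a confident one.
estimates = iter([0.3, 0.4, 0.8])
print(plan_grasp(lambda: next(estimates), lambda: None))
# prints: Decision(action='grasp', nudges=2, confidence=0.8)
```

In a real system the success estimate would come from the trained deep learning model and the nudge from an arm motion; both are stubbed out here so the control flow can be read on its own.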

Most current robotic arms can only grab "familiar" objects, and researchers often have to give them extensive practice, which is very time-consuming. Berkeley's new invention demonstrates a different approach: using deep learning and cloud services for robotic grasping. This avoids much of that training and makes robots more applicable in factories and warehouses. With deep learning, robots could even work in new settings such as hospitals and homes.

Ken Goldberg, a professor at UC Berkeley, said that unlike traditional robots, which require several months of physical trials, this robot does not need to practice in the real world. In a simulated environment it learns from the three-dimensional shapes, visual appearances, and grasping techniques contained in the dataset, and the "training" takes only one day.

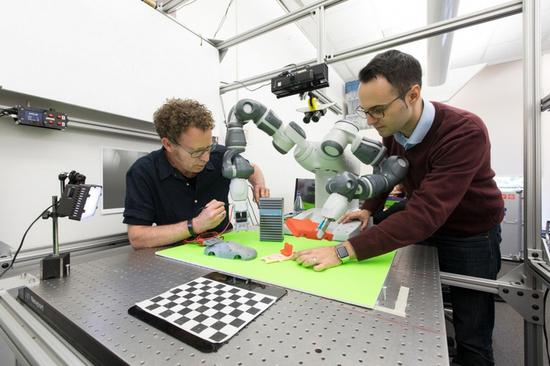

Berkeley Professor Ken Goldberg (left) and Siemens Research Team Leader Juan Aparicio

The papers describing the research are reportedly due to be published in July this year, when Professor Goldberg and his fellow researchers will also release the 3D dataset used by the robot to help advance computer vision technology.

At present, machine vision technology is still in its infancy, the academic community is severely short of relevant data, and systematic datasets urgently need to be built. Advances in deep learning, control algorithms, and new materials will help build new physical robots and will open a new era of “machine substitution” with significant economic impact. Although robots appeared in warehouses and on production lines decades ago, and Amazon's Kiva robots are one example in use today, most current robots can only carry items, and robotic sorting is still quite clumsy.

A laboratory at the Massachusetts Institute of Technology is running similar experiments, and its researchers are impressed by Berkeley’s results. A German company has contacted Berkeley in the hope of commercializing the technology.

Dexterous hands were vital to the development of human intelligence. Over the long course of evolution, humans developed a virtuous feedback cycle in which vision became more acute and brain power was greatly enhanced. Although “grasping” is simple, it will certainly play a role in the evolution of artificial intelligence.
